For my new computer I bought an ATI HD 2600 PRO with a lot of memory. This card gives really good 3D results and works well on Linux. But I ran into some issues with the Xv extension on this board. In fact, the driver (free or binary) doesn't seem to support sync on vblank. So when an application tries to display data on the screen, the image tears. This mainly occurs when I'm watching videos, but in 3D games too. This is a really stupid bug, or misfeature. How can a serious video programmer do that?

After a couple of months, I decided it was enough: I was sick of these dirty stripes on screen. I tested every ATI driver, one after another… (ATI's open-source drivers perform too poorly to be used on an everyday desktop; could you live without Google Earth?) …so I decided to go to the other side and bought an ASUS Nvidia 8600GT. This card performs about as well as the ATI in 3D and has an affordable price. So I switched from ATI to Nvidia.

ATI offers better open-source support, but the Nvidia binary driver is really nice to use and has better support today for things like Compiz and co. And NO MORE STRIPES!! :)

A couple of weeks ago, I upgraded my Ubuntu Gutsy to Hardy. Everything was OK until I played with Firefox. Some heavy pages (like Amazon or Gmail) were damn sloowwwww! Scrolling was a source of… grrrrrr… Firefox on Hardy is 3.0b5. This version has a major "feature": it uses XRender to display pages. And it looks like XRender is damn slow on Nvidia cards. In fact, Nvidia has already worked on this kind of issue before. Without looking any further, I decided to run a little benchmark with xrenderbenchmark, with the help of some friends. So here are the results.

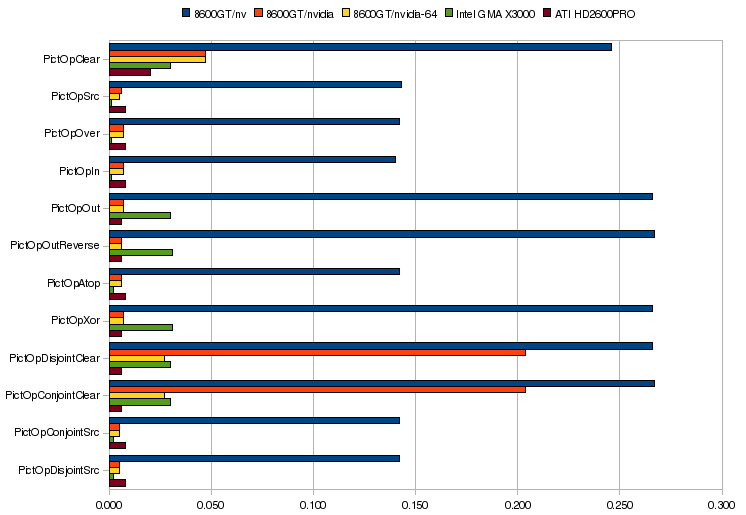

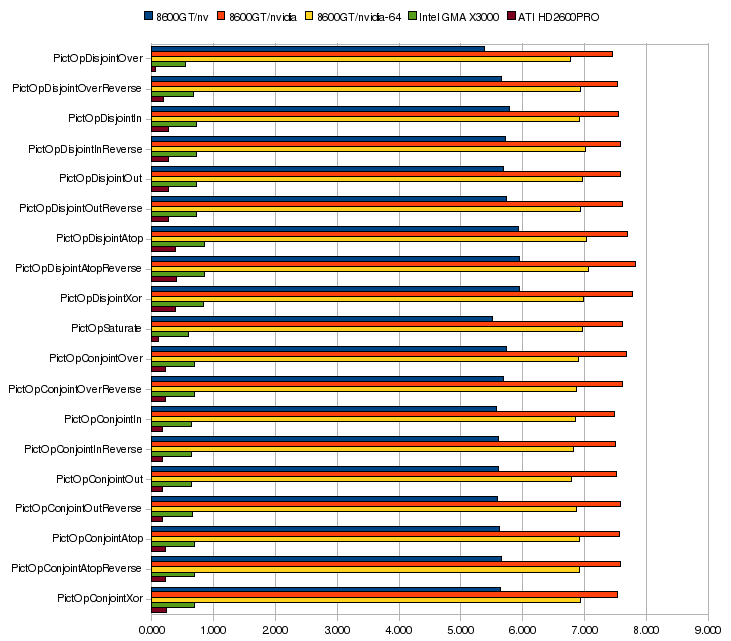

Benchmarks

The benchmarks were done by me and 2 friends, on Q6600 or E6600 Intel CPUs running at 2.4 GHz, with kernel 2.6.24.1. The graphs only show the Plain results (not Plain + Alpha, or Transformation), but the results are about the same anyway.

Legend:

- 8600GT/nv : Nvidia 8600GT / Xorg 1.4.1git 32 bits / Nvidia GPL driver

- 8600GT/nvidia : Nvidia 8600GT / Xorg 1.4.1git 32 bits / Nvidia binary driver ver: 169.12

- 8600GT/nvidia-64 : Nvidia 8600GT / Xorg 1.4.1git 64 bits / Nvidia binary driver ver: 169.12

- Intel GMA X300 : Intel GMA 3000 / Xorg 1.4.0.9 64 bits / Intel GPL driver

- ATI HD2600PRO : ATI HD 2600 Pro / Xorg 1.4.1git 64 bits / ATI GPL driver

I split the results into two graphs for convenience.

As you can see in this first part, the numbers are really small, and the Nvidia GPL driver is the worst: 5 times slower than any other one. Not good news, and the binary one has some bad results on 2 tests. The ATI HD and Nvidia drivers give about the same results, but remember this is the GPL ATI driver! The Intel results are quite uneven in this part.

But the next graph gives us an absolutely different picture!

In every graph, the Nvidia drivers (GPL or binary, 32 or 64 bits) are at least 6 times slower. Intel performs very well, no surprise: these cards are damn cool, with a perfect driver for Linux… but too slow in 3D to really rock. And the ATI GPL driver is the clear winner of these benchmark tests.
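Statements like "5 times slower" or "at least 6 times slower" are ratios between timings. Since xrenderbenchmark reports times in seconds (lower is better, as noted in the comments), the comparison can be sketched as below. This is a minimal illustration assuming the timings have already been copied out of the xrenderbenchmark output into dictionaries; the function names and the structure of `results` are hypothetical, not part of xrenderbenchmark itself.

```python
from typing import Dict

# Hypothetical layout: results[test_name][driver_label] = time in seconds.
Results = Dict[str, Dict[str, float]]


def slowdown_factors(timings: Dict[str, float]) -> Dict[str, float]:
    """For one test, return how many times slower each driver is than the fastest.

    `timings` maps a driver label to the time in seconds (lower is better),
    so the fastest driver gets a factor of 1.0.
    """
    best = min(timings.values())
    return {driver: t / best for driver, t in timings.items()}


def min_slowdown(results: Results, driver: str, reference: str) -> float:
    """Smallest driver/reference time ratio over all tests.

    A value of N means "`driver` is at least N times slower than
    `reference` in every test".
    """
    return min(tests[driver] / tests[reference] for tests in results.values())
```

Because the ratio cancels the unit, the same conclusion holds whether the numbers are seconds or anything else, which is why the graphs can compare cards directly.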

As my issue is the Nvidia one, I can comment on these results: on this second graph, the GPL driver performs better than the binary one. This is not a big surprise, because I can see it in Firefox, even if it's still slow. There is a difference between 64 and 32 bits, but I guess this is more kernel-related than due to the driver itself.

I'm not a video guru and only did this to figure out what's going on with my computer. I'm publishing it in the hope that it might help somebody else, and to find help.

Update: The numbers can be found here

Thanks to Ludo and Christian for their help !

Important update: Check the new driver results!!

Obviously, you're not the only one to complain about that:

http://www.nvnews.net/vbulletin/showthread.php?t=114858

“on Q6600 or E6600 Intel CPU running at 2.4Gh”

Doesn't this invalidate your results if you're using 2 different CPUs to test different systems?

The Q6600 and E6600 are both 2.4 GHz Intel Core chips; the main difference is the number of cores. They both run at the same speed. And the graphics drivers don't use multiple cores for this kind of test anyway.

To conclude, the best and worst results come from exactly the same hardware.

I tested the Nvidia on my Q6600, and Chris tested the ATI on the exact same mobo, RAM, etc. etc., and the same distro (we bought the same hardware in the same week).

And finally, before somebody asks, I tested the latest 173.XXX Nvidia driver series and got the same (bad) results.

Bye ..

Nice graphs, but what do you mean by “ATI GPL driver”? Is it radeon or radeonhd?

The tested ATI GPL driver is radeon (not radeonhd), because radeonhd wasn't included in the Ubuntu distro at that time.

Strange: when I had an ATI card with the open-source driver, Firefox lagged a lot (with Compiz enabled). Now I have an Nvidia card and it's lightning fast.

Of course, for this benchmark we disabled Compiz (and AIGLX)…

To comment: Compiz sucks on ATI drivers anyway… so not really strange.

Well, now I know why KDE 4 is so slow on this system with NVIDIA compared to another, older one with ATI. Just like Firefox 3, KDE 4 takes advantage of the modern XRender architecture to speed up rendering. Too bad NVIDIA's XRender support is so poor. And they also have no support for XRandR 1.2.

In fact, the problem should be fixed in the next Nvidia driver release, so… we need to wait.

I bought my Dell laptop with an nVidia 8600GT graphics card, and I notice that it is very slow when I run Firefox 3 on pages that include Flash banners; Qt4 applications are the same. For this reason I can't use KDE4 yet. I'll wait for the fix in the next release of the nVidia proprietary drivers.

Greetings from Argentina.

http://www.nvnews.net/vbulletin/showpost.php?p=1696850&postcount=45

I asked them when the fix will be out…

Nvidia is fixing that, or so I hear; don't give up hope! As for ATI, they are also making awesome progress with fglrx: it is enormously better than just 6 months ago… XVideo, S3 sleep, and Compiz all work pretty well now on my laptop with an X1400.

The next Nvidia release. Yes, but for whom? Do legacy drivers get fixed as well? I'm afraid not!! And I don't think the so-called "legacy" Nvidia cards lack the capability to provide a decent 2D working environment.

This problem doesn’t exist with pre-G80 (7xxx and lower) NVidia parts. The 8xxx+ cards lack the traditional 2D engine, so you HAVE to use the 3D engine for 2D acceleration with these newer cards. Looks like NVidia doesn’t have such a driver ready — AFAIK the drivers at the moment use software rendering for 2D stuff in this case.

default:

Really? I'm missing something. The nv forum says this was a bug and should be fixed in the next release. (I'm currently using the bleeding-edge 177 beta) and the bug is still there. I've never heard that the 2D engine is missing…

Here are my results from xrenderbenchmark. I created identical graphs so the comparison is easy. The behaviour of the graphics card is similar. In the first graph nvidia is always faster than nv, but in the second graph nvidia is a bit slower than nv. The numbers show that my system (despite a slower Athlon XP 1800+ and a GeForce 4 Ti4600) is faster than the one in this blog post's benchmark. So the problem is more intense on the latest cards, but it DOES exist in legacy drivers as well.

http://img176.imageshack.us/my.php?image=graph1vv2.jpg

http://img169.imageshack.us/my.php?image=graph2sk9.jpg

Do you agree?

From my experience I'd say the problem does not only affect GeForce 8xxx/9xxx cards. I'd love to run some benchmarks comparable to yours with my GeForce 6200, as I've got exactly the same rendering performance problem with Firefox 3.

Unfortunately, I upgraded to SUSE 11.0 a few weeks ago (hoping an upgrade to the most recent system + drivers would fix it… should have known better: never install a ".0"…), with one side effect being that none of the available Nvidia binary drivers work with my card any longer.

But the Firefox 3 rendering problem was already there with my old configuration (nvidia driver). After the failed software upgrade I was hoping an upgrade to a GeForce 8x/9x card could help, but now I'm leaning more towards cross-grading to ATI. I don't need Compiz, just decent 2D performance and dual-screen support.

Hi Michael

If you have an "old" GeForce, look closely at the nv driver. From what I know right now, the 6XXX (and earlier) cards have dedicated 2D hardware, so the nv driver should work quite well.

About ATI: I've read a couple of people saying they will switch to ATI. Fine, but read the beginning of this post carefully. I switched from ATI to Nvidia because the ATI doesn't support vblank sync, and believe me, this is a real pain if you want to watch movies on this card.

Fucking shitty graphs. Include some fucking units.

To unknown: the units don't really matter, as we only compare cards with cards… but anyway, if you're wondering, the unit is "seconds"… just check the xrenderbenchmark output to convince yourself.